Fault recovery and monitoring remain significant challenges when training large-scale AI models. Conventional practice often restarts the entire training job when a single component fails, which causes downtime and drives up operating costs. As training clusters grow, detecting and resolving critical problems such as stuck GPUs and numerical instabilities typically requires complex, custom monitoring code.

To address these challenges, Amazon SageMaker HyperPod is designed to accelerate AI model development across hundreds or even thousands of GPUs. Its built-in resilience can reduce model training time by up to 40%. The HyperPod Training Operator extends this resilience to Kubernetes workloads through fine-grained recovery and customizable monitoring.

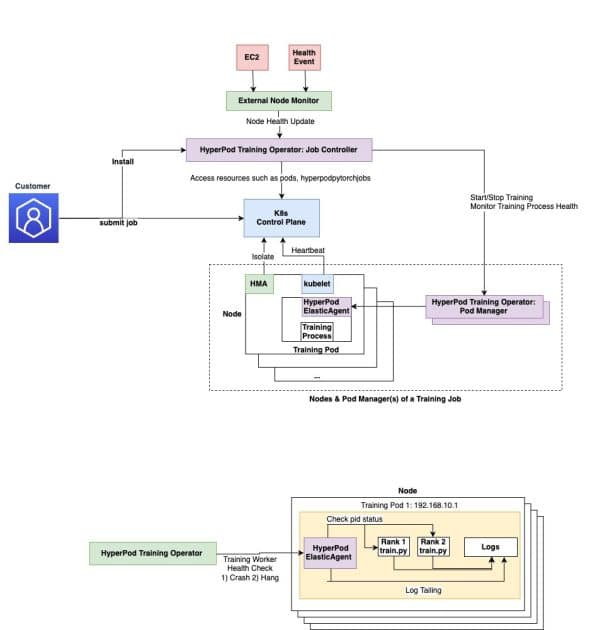

Implemented as an add-on for Amazon Elastic Kubernetes Service (Amazon EKS), the operator manages distributed training across large GPU clusters. Its architecture follows the Kubernetes Operator pattern and is built around key components such as the Job controller and the Pod manager, which together make resource management more efficient.
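At its core, the Kubernetes Operator pattern is a control loop: it compares the state a user declared (for example, in a job manifest) with the state actually observed in the cluster and acts to close the gap. The minimal sketch below illustrates that reconcile idea in plain Python. `JobSpec`, `Cluster`, and `reconcile` are hypothetical names, not the HyperPod Training Operator's real types or API, and a real controller would watch the Kubernetes API server rather than an in-memory dict.

```python
"""Minimal sketch of the Kubernetes Operator reconcile pattern.

Hypothetical: JobSpec, Cluster and reconcile() are illustrative names,
not the HyperPod Training Operator's real types or API.
"""
from dataclasses import dataclass, field


@dataclass
class JobSpec:
    # Desired state, as a user would declare it in a manifest.
    name: str
    replicas: int


@dataclass
class Cluster:
    # Observed state: pod name -> phase ("Running", "Failed", ...).
    pods: dict[str, str] = field(default_factory=dict)


def reconcile(spec: JobSpec, cluster: Cluster) -> list[str]:
    """Move the observed state toward the desired state; return actions taken."""
    actions = []
    desired = {f"{spec.name}-worker-{i}" for i in range(spec.replicas)}
    for pod in sorted(desired):
        phase = cluster.pods.get(pod)
        if phase is None:
            # Pod is declared but missing: create it.
            cluster.pods[pod] = "Running"
            actions.append(f"create {pod}")
        elif phase == "Failed":
            # Pod exists but failed: replace it.
            cluster.pods[pod] = "Running"
            actions.append(f"recreate {pod}")
    for pod in sorted(set(cluster.pods) - desired):
        # Pod is running but no longer declared: remove it.
        del cluster.pods[pod]
        actions.append(f"delete {pod}")
    return actions
```

Calling `reconcile` repeatedly is safe by design: once the observed state matches the spec, it returns an empty list of actions, which is why controllers can simply rerun the loop whenever something changes.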

With the training operator, SageMaker HyperPod supports granular process recovery: instead of restarting an entire job after a failure, it restarts only the affected processes. This cuts recovery time from minutes to seconds and significantly improves operational efficiency. The system also detects nodes that have become unhealthy because of hardware issues and automatically restarts the affected jobs or processes, removing the need for manual intervention.
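To make the difference between a full restart and granular recovery concrete, the sketch below computes which processes would need to be relaunched. It assumes a hypothetical `plan_recovery` helper that receives a map from each global rank to its node, the set of ranks that crashed, and the set of nodes flagged as unhealthy. It only models the restart decision, not how the operator actually relaunches or resynchronizes processes.

```python
"""Sketch: decide which processes to restart after a failure.

Hypothetical helper, not the operator's real API. The input maps each
global rank to the node hosting it, as a scheduler might report it.
"""


def plan_recovery(rank_to_node: dict[int, str], failed_ranks: set[int],
                  unhealthy_nodes: set[str]) -> dict[str, list]:
    """Restart only the failed ranks plus every rank on an unhealthy node."""
    # Ranks whose process crashed on an otherwise healthy node.
    restart = {r for r in failed_ranks if r in rank_to_node}
    # Every rank on an unhealthy node must move to a replacement node.
    restart |= {r for r, node in rank_to_node.items() if node in unhealthy_nodes}
    return {
        "restart_ranks": sorted(restart),
        "replace_nodes": sorted(unhealthy_nodes),
        "keep_running": sorted(set(rank_to_node) - restart),
    }


layout = {0: "node-a", 1: "node-a", 2: "node-b", 3: "node-b", 4: "node-c", 5: "node-c"}
plan = plan_recovery(layout, failed_ranks={1}, unhealthy_nodes={"node-c"})
print(plan)
```

In this example three of six processes are relaunched instead of all six. In practice the surviving processes still have to resynchronize with the restarted ones (for example from the latest checkpoint); the saving comes from not tearing down and re-initializing the healthy ones.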

Additional benefits include centralized monitoring of the training process and efficient rank allocation, both of which make fault detection more effective. Problems such as stalled jobs and performance degradation can be detected with simple YAML configurations.
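A monitoring rule of the kind described above typically combines two checks: has an expected log line stopped appearing (a stall), and has a metric parsed from it dropped below a threshold (degradation)? The sketch below evaluates such a rule in Python. The `LogRule` fields (`pattern`, `max_silence_s`, `min_value`) are hypothetical and are not the operator's actual YAML schema; they only show the logic such a configuration expresses.

```python
"""Sketch of a log-based watchdog rule for detecting stalled or slow jobs.

The rule fields are hypothetical and only mirror the kind of settings a
monitoring configuration might expose; they are not a real schema.
"""
import re
from dataclasses import dataclass


@dataclass
class LogRule:
    name: str
    pattern: str          # regex with one capture group holding a number
    max_silence_s: float  # flag a stall if no match arrives within this window
    min_value: float      # flag degradation if the captured value drops below this


def evaluate(rule: LogRule, lines: list[tuple[float, str]], now: float) -> list[str]:
    """Return alerts for (timestamp, text) log lines observed up to `now`."""
    regex = re.compile(rule.pattern)
    alerts = []
    last_match = None
    for ts, text in lines:
        m = regex.search(text)
        if m is None:
            continue  # line is unrelated to this rule
        last_match = ts
        value = float(m.group(1))
        if value < rule.min_value:
            alerts.append(f"{rule.name}: degraded ({value} < {rule.min_value}) at t={ts}")
    # No matching line at all, or none recently enough, counts as a stall.
    if last_match is None or now - last_match > rule.max_silence_s:
        alerts.append(f"{rule.name}: stalled (no match for > {rule.max_silence_s}s)")
    return alerts


rule = LogRule("throughput", r"tokens/s=(\d+(?:\.\d+)?)", max_silence_s=120.0, min_value=1000.0)
logs = [(0.0, "step 1 tokens/s=1500"), (60.0, "step 2 tokens/s=800"), (70.0, "checkpoint saved")]
print(evaluate(rule, logs, now=100.0))
```

Evaluated at `now=100.0`, only the throughput drop at `t=60.0` is reported. Evaluated much later with no new matching lines, the same rule would also report a stall.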

AWS provides a detailed guide to deploying the operator and managing machine learning workloads on Amazon SageMaker HyperPod. Installation is fairly quick, typically 30 to 45 minutes, provided the required AWS resources and permissions are in place beforehand.

The process includes installing additional components, creating a HyperPod cluster, and managing PyTorch-based training jobs through Kubernetes manifests. Once training is complete, clean up the resources you created to avoid unnecessary charges, including the HyperPod jobs, container images, and installed plugins.
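A PyTorch training job declared in a manifest ultimately runs a fixed number of processes on each node, and every process needs to know its place in the job. The sketch below reproduces the numbering that PyTorch's `torchrun` launcher documents through the `RANK`, `LOCAL_RANK`, `GROUP_RANK`, `WORLD_SIZE`, and `LOCAL_WORLD_SIZE` environment variables, assuming one worker group per node and the same number of processes on every node. Whether the HyperPod operator launches processes through `torchrun` is an assumption here, and `rank_layout` is a hypothetical helper.

```python
"""Sketch of how a PyTorch job's processes are numbered across nodes.

Mirrors torchrun's documented environment variables for a homogeneous job
(one worker group per node). rank_layout() is a hypothetical helper.
"""


def rank_layout(num_nodes: int, nproc_per_node: int) -> list[dict[str, int]]:
    """Return one dict of torchrun-style variables per process, in rank order."""
    if num_nodes < 1 or nproc_per_node < 1:
        raise ValueError("num_nodes and nproc_per_node must be positive")
    world_size = num_nodes * nproc_per_node
    layout = []
    for group_rank in range(num_nodes):           # one worker group per node
        for local_rank in range(nproc_per_node):  # typically one process per GPU
            layout.append({
                "RANK": group_rank * nproc_per_node + local_rank,
                "LOCAL_RANK": local_rank,
                "GROUP_RANK": group_rank,
                "WORLD_SIZE": world_size,
                "LOCAL_WORLD_SIZE": nproc_per_node,
            })
    return layout


for proc in rank_layout(num_nodes=2, nproc_per_node=4):
    print(proc)
```

The values are shown as integers for readability; in a real process they arrive as strings in the environment. With elastic rendezvous, the node order is decided at rendezvous time rather than by the loop order used here.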

The operator aims to address common challenges organizations face when developing large-scale AI models, offering a more robust way to handle failures and monitor training. By following the setup instructions and exploring the example training jobs, organizations can evaluate how best to apply it to their own AI workloads.