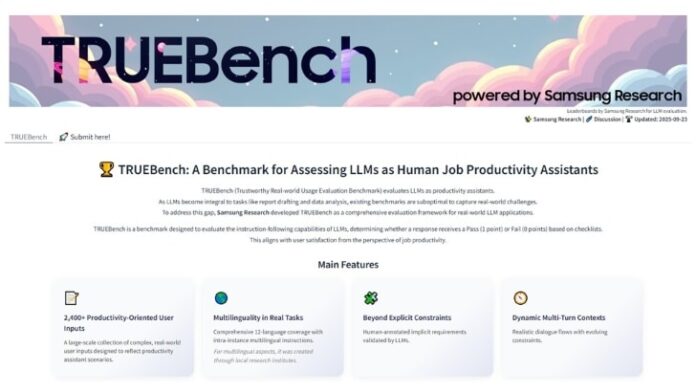

Samsung Electronics has launched TRUEBench (Trustworthy Real-world Usage Evaluation Benchmark), a benchmark developed by Samsung Research to measure how productive artificial intelligence is in work environments. It provides a set of metrics for evaluating large language models (LLMs) in real-world productivity applications, covering diverse dialogue scenarios and multilingual conditions.

TRUEBench responds to the growing need to measure how well LLMs handle common business tasks such as content generation, data analysis, summarization, and translation. Organized into 10 categories and 46 subcategories, the benchmark comprises 2,485 test sets in 12 languages and also supports cross-linguistic scenarios. This sets it apart from existing benchmarks, which are usually limited to simple question-and-answer structures in a single language.

Paul (Kyungwhoon) Cheun, CTO of Samsung Electronics' DX Division, emphasized the company's practical experience with AI, stating that TRUEBench could establish a new standard for evaluation and reinforce Samsung's technological leadership in this field.

TRUEBench's evaluation goes beyond measuring the accuracy of responses, because users' instructions do not always state their intentions explicitly. To account for these implicit conditions, the evaluation criteria are developed through a collaborative process between humans and AI, which is intended to keep the criteria accurate, reduce subjective bias, and make scoring consistent.

TRUEBench's data and leaderboards are also available on the open-source platform Hugging Face, where users can compare up to five models at a time. Alongside performance scores, the leaderboards report the average length of responses, giving a view of both the efficiency and the effectiveness of the AI models currently on the market.
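To make the comparison concrete, the following is a minimal Python sketch of how a side-by-side view of up to five models, with a score and an average response length, might be computed. It is an illustration only, not TRUEBench's actual code or data format: the `ModelResult` class, the `compare` function, the pass/fail scoring, and the use of character counts for length are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean

# The article states that up to five models can be compared at once.
MAX_MODELS = 5


@dataclass
class ModelResult:
    """Hypothetical per-model results; not TRUEBench's real schema."""
    model: str
    passed: list[bool]           # one pass/fail flag per test (assumed scoring)
    response_lengths: list[int]  # length of each response, e.g. in characters


def compare(results: list[ModelResult]) -> list[dict]:
    """Return one row per model, sorted by pass rate (highest first)."""
    if len(results) > MAX_MODELS:
        raise ValueError(f"at most {MAX_MODELS} models can be compared")
    rows = [
        {
            "model": r.model,
            "pass_rate": sum(r.passed) / len(r.passed),
            "avg_length": mean(r.response_lengths),
        }
        for r in results
    ]
    return sorted(rows, key=lambda row: row["pass_rate"], reverse=True)


if __name__ == "__main__":
    # Made-up numbers for two placeholder models.
    demo = [
        ModelResult("model-b", [True, False, False, False], [400, 400, 400, 400]),
        ModelResult("model-a", [True, True, False, True], [120, 80, 100, 100]),
    ]
    for row in compare(demo):
        print(row)
```

Reporting average length next to the score shows whether a model reaches its results with concise or with lengthy answers, which is the efficiency aspect the leaderboards are described as exposing.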