Amazon has launched a new capability for managing artificial intelligence workloads on its SageMaker platform. The feature extends SageMaker HyperPod task governance with topology-aware scheduling, which places training tasks so as to reduce network latency and improve efficiency, a crucial factor for demanding generative AI workloads.

This advancement enables more efficient allocation of computing resources in Amazon EKS (Elastic Kubernetes Service) clusters, so that capacity can be shared effectively across different teams and projects. Administrators can now govern the use of accelerated computing more closely and define priority policies for tasks, which increases resource utilization. As a result, organizations can focus on innovating in generative AI and shorten time to market instead of worrying about the details of resource allocation.

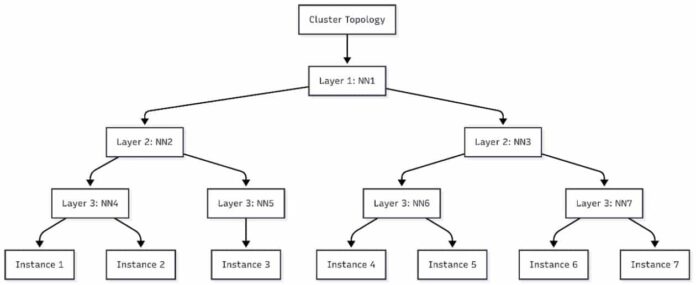

Generative AI workloads require intensive communication between Amazon EC2 (Elastic Compute Cloud) instances, so network latency can become a major bottleneck. Data centers are organized into hierarchical network units, and instances that sit under the same unit communicate with lower latency. Placing instances that talk to each other within the same unit can therefore reduce processing time significantly.
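To make the hierarchy concrete, the following Python sketch models each instance as a list of network-node IDs, one per layer, ordered from the top of the hierarchy to the bottom, and counts how many layers two instances share. The three-layer depth, the node IDs, and the `network_distance` metric are illustrative assumptions rather than an AWS API; the only idea taken from the text is that instances under the same unit are "closer".

```python
from typing import Sequence


def shared_layers(a: Sequence[str], b: Sequence[str]) -> int:
    """Count how many network layers, starting from the top, two instances share."""
    shared = 0
    for node_a, node_b in zip(a, b):
        if node_a != node_b:
            break  # paths diverge; deeper layers cannot be shared either
        shared += 1
    return shared


def network_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Hypothetical latency proxy: how many layers traffic must climb.

    0 means both instances hang off the same bottom-layer network node.
    """
    if len(a) != len(b):
        raise ValueError("instances must report the same number of layers")
    return len(a) - shared_layers(a, b)


# Illustrative node IDs, ordered from the top layer to the bottom layer.
i1 = ["nn-a", "nn-b", "nn-c"]
i2 = ["nn-a", "nn-b", "nn-c"]
i3 = ["nn-a", "nn-b", "nn-d"]
i4 = ["nn-a", "nn-x", "nn-y"]

print(network_distance(i1, i2))  # 0
print(network_distance(i1, i3))  # 1
print(network_distance(i1, i4))  # 2
```

A scheduler that prefers pairs with a smaller distance keeps chatty training processes under the same low-level network unit.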

SageMaker HyperPod uses EC2 topology information, which describes how nodes are physically arranged in the network. With this information, HyperPod can place workloads to reduce latency and thereby improve training efficiency.
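EC2 exposes this information through the `DescribeInstanceTopology` API (in boto3, the EC2 client method `describe_instance_topology`), whose response lists each instance with a `NetworkNodes` array. The sketch below works on a hand-written sample shaped like that response, so it runs without AWS credentials, and turns each instance's node list into per-layer label keys. The assumptions are loud ones: that `NetworkNodes` is ordered from layer 1 (top) downward, and that the label keys follow the `topology.k8s.aws/network-node-layer-N` pattern. This illustrates the mapping only; it is not how HyperPod itself populates node labels.

```python
from typing import Any, Dict, List

# Assumed label naming, following the topology.k8s.aws/network-node-layer-N pattern.
LABEL = "topology.k8s.aws/network-node-layer-{}"


def labels_from_topology(response: Dict[str, Any]) -> Dict[str, Dict[str, str]]:
    """Map each instance ID to per-layer labels.

    Assumes `NetworkNodes` is ordered from layer 1 (top) to the bottom layer.
    """
    result: Dict[str, Dict[str, str]] = {}
    for instance in response.get("Instances", []):
        nodes: List[str] = instance.get("NetworkNodes", [])
        result[instance["InstanceId"]] = {
            LABEL.format(layer): node for layer, node in enumerate(nodes, start=1)
        }
    return result


# Hand-written sample shaped like a DescribeInstanceTopology response.
sample = {
    "Instances": [
        {"InstanceId": "i-0001", "NetworkNodes": ["nn-1", "nn-2", "nn-3"]},
        {"InstanceId": "i-0002", "NetworkNodes": ["nn-1", "nn-2", "nn-4"]},
    ]
}

for instance_id, labels in labels_from_topology(sample).items():
    print(instance_id, labels)
```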

With this topology-aware scheduling, HyperPod improves communication within the network and runs tasks more efficiently. Topology labels on the nodes let the scheduler make better use of resources, which is crucial for demanding AI workloads.
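The following Python sketch shows one way a scheduler could use such labels. It groups nodes by their layer-3 label (the layer closest to the instances), picks the smallest group that can hold the whole job, and widens to layer 2 and then layer 1 only when nothing fits. The `limit_layer` argument loosely mirrors a "required" constraint by refusing to widen past a given layer. This best-fit greedy strategy, the label keys, and the helper names are hypothetical illustrations, not the algorithm HyperPod or its scheduler actually implements.

```python
from collections import defaultdict
from typing import Dict, List, Optional

LABEL = "topology.k8s.aws/network-node-layer-{}"


def place(nodes: Dict[str, Dict[str, str]], count: int,
          limit_layer: int = 1) -> Optional[List[str]]:
    """Pick `count` nodes that share the deepest possible network node.

    Tries layer 3 (closest to the instances) first and widens one layer at
    a time up to `limit_layer`. Returns None if nothing fits.
    """
    for layer in range(3, limit_layer - 1, -1):
        groups: Dict[str, List[str]] = defaultdict(list)
        for name, labels in nodes.items():
            domain = labels.get(LABEL.format(layer))
            if domain is not None:
                groups[domain].append(name)
        fits = [sorted(members) for members in groups.values() if len(members) >= count]
        if fits:
            # Best fit: use the smallest group that is large enough,
            # keeping larger groups free for bigger jobs.
            best = min(fits, key=lambda members: (len(members), members))
            return best[:count]
    return None


def node(l1: str, l2: str, l3: str) -> Dict[str, str]:
    """Build a label dict for one node (helper for the example)."""
    return {LABEL.format(1): l1, LABEL.format(2): l2, LABEL.format(3): l3}


cluster = {
    "n1": node("a", "b1", "c1"),
    "n2": node("a", "b1", "c1"),
    "n3": node("a", "b1", "c2"),
    "n4": node("a", "b2", "c3"),
    "n5": node("a", "b2", "c3"),
    "n6": node("a", "b2", "c3"),
}

print(place(cluster, 2))                 # ['n1', 'n2']
print(place(cluster, 4))                 # ['n1', 'n2', 'n3', 'n4']
print(place(cluster, 4, limit_layer=2))  # None
```

Choosing the smallest adequate group is a deliberate trade-off: it keeps the large layer-3 group (`c3`) intact for a later three-node job instead of fragmenting it.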

Data scientists, who often deal with the complexity of training and deploying models on accelerated computing instances, now get better visibility into and control over where training workloads are placed. Using this scheduling involves first confirming the topology information on the cluster nodes and then running scripts that show which instances share the same network nodes.
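As a sketch of the first step, the Python function below reads the JSON produced by `kubectl get nodes -o json` (a standard Kubernetes `NodeList` with `items[].metadata.labels`) and reports nodes that lack any of the three topology labels. The label keys are an assumption based on the `topology.k8s.aws/network-node-layer-N` naming; check the keys on your own nodes before relying on them. The sample input is trimmed and hand-written.

```python
import json
from typing import Dict, List

# Assumed topology label keys; verify against your cluster's node labels.
REQUIRED = [f"topology.k8s.aws/network-node-layer-{i}" for i in (1, 2, 3)]


def missing_topology_labels(kubectl_json: str) -> Dict[str, List[str]]:
    """Return {node name: missing label keys} for nodes lacking topology labels."""
    node_list = json.loads(kubectl_json)
    report: Dict[str, List[str]] = {}
    for item in node_list.get("items", []):
        metadata = item.get("metadata", {})
        labels = metadata.get("labels", {})
        missing = [key for key in REQUIRED if key not in labels]
        if missing:
            report[metadata.get("name", "<unnamed>")] = missing
    return report


# Trimmed sample in the shape of `kubectl get nodes -o json` output.
sample = json.dumps({
    "items": [
        {"metadata": {"name": "node-a", "labels": {key: "nn-1" for key in REQUIRED}}},
        {"metadata": {"name": "node-b", "labels": {REQUIRED[0]: "nn-1"}}},
    ]
})

# Only node-b is reported, with its layer-2 and layer-3 labels missing.
print(missing_topology_labels(sample))
```

In practice, the output of `kubectl get nodes -o json` would be passed in instead of `sample`; an empty result means every node carries all three labels.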

Adopting this feature requires an EKS cluster and a SageMaker HyperPod cluster whose instances have topology information enabled, along with the SageMaker HyperPod task governance add-on and other prerequisites. The topology information itself can be inspected with kubectl commands.
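`kubectl get nodes -L <label>` (repeated once per label) already prints topology labels as columns. The Python sketch below goes one step further and renders the same information as an indented layer-1 > layer-2 > layer-3 tree, which makes it easy to see which nodes share a network node. The input is a plain dict of node name to labels, for example as extracted from `kubectl get nodes -o json`; the label keys and the sample values are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict

LABEL = "topology.k8s.aws/network-node-layer-{}"


def render_topology(nodes: Dict[str, Dict[str, str]]) -> str:
    """Render nodes as an indented layer-1 > layer-2 > layer-3 tree."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for name, labels in nodes.items():
        # Missing labels are shown as "?" rather than dropped.
        l1, l2, l3 = (labels.get(LABEL.format(i), "?") for i in (1, 2, 3))
        tree[l1][l2][l3].append(name)
    lines = []
    for l1 in sorted(tree):
        lines.append(l1)
        for l2 in sorted(tree[l1]):
            lines.append("  " + l2)
            for l3 in sorted(tree[l1][l2]):
                members = ", ".join(sorted(tree[l1][l2][l3]))
                lines.append(f"    {l3}: {members}")
    return "\n".join(lines)


cluster = {
    "n1": {LABEL.format(1): "a", LABEL.format(2): "b1", LABEL.format(3): "c1"},
    "n2": {LABEL.format(1): "a", LABEL.format(2): "b1", LABEL.format(3): "c1"},
    "n3": {LABEL.format(1): "a", LABEL.format(2): "b2", LABEL.format(3): "c3"},
}
print(render_topology(cluster))
```

For the sample above, `n1` and `n2` appear together under `c1`, signaling that they share a bottom-layer network node, while `n3` sits under a different layer-2 branch.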

Finally, SageMaker HyperPod offers two ways to schedule tasks with topology awareness: adding topology annotations to Kubernetes manifest files, or using the SageMaker HyperPod command-line interface (CLI).
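For the manifest route, the Python sketch below builds a `batch/v1` Job whose pod template carries a Kueue topology annotation and prints it as JSON, which `kubectl apply -f` accepts as readily as YAML. It assumes that HyperPod task governance honors Kueue's Topology Aware Scheduling annotations (`kueue.x-k8s.io/podset-preferred-topology` to prefer co-location, `kueue.x-k8s.io/podset-required-topology` to enforce it) with the `topology.k8s.aws/network-node-layer-N` label as the value. The job name, image, and queue name are hypothetical placeholders.

```python
import json
from typing import Any, Dict


def topology_job(name: str, image: str, replicas: int, queue: str,
                 layer: int = 3, required: bool = False) -> Dict[str, Any]:
    """Build a batch/v1 Job that asks Kueue to co-locate its pods."""
    annotation = ("kueue.x-k8s.io/podset-required-topology" if required
                  else "kueue.x-k8s.io/podset-preferred-topology")
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": name,
            # Hypothetical queue name; use the LocalQueue set up for your team.
            "labels": {"kueue.x-k8s.io/queue-name": queue},
        },
        "spec": {
            "parallelism": replicas,
            "completions": replicas,
            "suspend": True,  # Kueue unsuspends the Job once it is admitted
            "template": {
                "metadata": {
                    "annotations": {annotation: f"topology.k8s.aws/network-node-layer-{layer}"},
                },
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": "trainer", "image": image}],
                },
            },
        },
    }


manifest = topology_job("topo-demo", "example.com/trainer:latest", replicas=4,
                        queue="team-a-localqueue")
print(json.dumps(manifest, indent=2))
```

The preferred form lets the job fall back to a wider network unit when the requested layer has no room, while `required=True` keeps it pending until the constraint can be met.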

In conclusion, this SageMaker HyperPod feature aims to simplify the management of generative AI workloads, improving efficiency and reducing network latency. Users are invited to try the feature and share their experiences.