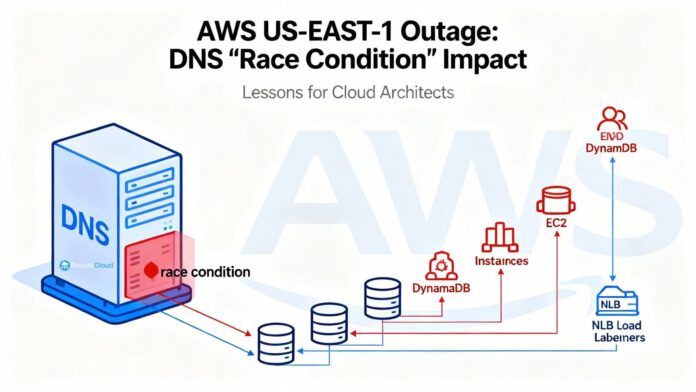

The recent outage in the AWS Northern Virginia region (us-east-1), which affected numerous services on October 19 and 20, was caused by a race condition in the automation that manages the DNS records for Amazon DynamoDB. Because the regional DynamoDB endpoint (`dynamodb.us-east-1.amazonaws.com`) stopped resolving, the impact spread widely and reached critical services such as IAM, EC2, Lambda, and many others.

AWS disabled this automation worldwide and had to restore the correct DNS state manually. In the hours that followed, DynamoDB-dependent services and the Network Load Balancer (NLB) still suffered significant disruptions, caused by DNS resolution errors and delays in propagating network state.

The root problem was a race condition in the system that generates and applies DNS plans for the endpoint. A delayed process applied an older plan over a newer one, and a cleanup step then deleted that older plan, leaving the endpoint with no IP addresses at all. Correcting the records in Amazon Route 53 required manual intervention.
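This failure mode is a classic check-then-act race. The Python sketch below is a simplified, hypothetical model, not AWS's actual code: `DnsStore`, `apply_unsafe`, `apply_safe`, `cleanup`, the plan generations, and the IP addresses are all invented for illustration. It replays one bad interleaving deterministically: a slow worker checks that its plan is newer, stalls, and then overwrites a newer plan; a cleanup step then deletes the old plan that the endpoint points to.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DnsStore:
    """Hypothetical stand-in for the endpoint's DNS state (not a real AWS API)."""
    plans: dict = field(default_factory=dict)  # plan generation -> list of IPs
    active: Optional[int] = None               # generation currently applied

    def resolve(self) -> list:
        # If the active plan was deleted, the record has no addresses at all.
        if self.active is None:
            return []
        return self.plans.get(self.active, [])


def apply_unsafe(store: DnsStore, gen: int, seen_active: Optional[int]) -> None:
    # "Is my plan newer?" was evaluated earlier against seen_active,
    # which may be stale by the time this write happens.
    if seen_active is None or gen > seen_active:
        store.active = gen


def apply_safe(store: DnsStore, gen: int) -> bool:
    # Re-check against the *current* state at write time. In a real system
    # this check-and-write must be atomic (e.g. a conditional write).
    if store.active is None or gen > store.active:
        store.active = gen
        return True
    return False


def cleanup(store: DnsStore, older_than: int, protect_active: bool) -> None:
    # Delete plans older than a threshold; the safe variant never deletes
    # the plan the endpoint currently points at.
    for gen in [g for g in store.plans if g < older_than]:
        if protect_active and gen == store.active:
            continue
        del store.plans[gen]


def simulate(safe: bool) -> list:
    store = DnsStore(plans={0: ["10.0.0.10"], 1: ["10.0.0.11"], 5: ["10.0.0.15"]},
                     active=0)
    seen_by_slow = store.active  # slow worker checks, then stalls
    # Fast worker applies the newest plan (generation 5).
    if safe:
        apply_safe(store, 5)
    else:
        apply_unsafe(store, 5, store.active)
    # Slow worker wakes up and writes its old plan (generation 1).
    if safe:
        apply_safe(store, 1)  # rejected: 1 is not newer than 5
    else:
        apply_unsafe(store, 1, seen_by_slow)  # stale check passes: 1 > 0
    # Fast worker cleans up plans older than generation 4.
    cleanup(store, older_than=4, protect_active=safe)
    return store.resolve()


print(simulate(safe=False))  # []
print(simulate(safe=True))   # ['10.0.0.15']
```

The safe variant illustrates two common mitigations, assumed here rather than taken from AWS's remediation: re-validating the plan's generation at the moment of the write, and never letting cleanup delete the plan that is currently active.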

Launching new EC2 instances was also affected: the internal systems that manage EC2's underlying hosts collapsed under the load, building up backlogs and delaying the service's recovery. Services such as Lambda and STS also suffered because of their direct or indirect dependence on DynamoDB.

The lessons learned and the announced measures underline the need to design architectures that account for regional failures, and they urge companies to consider multi-region configurations to limit the impact of future outages. Recommended practices include separating the data plane from the control plane, managing DNS TTLs carefully, and preparing for failure scenarios through drills and detailed runbooks.
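As a hedged illustration of the TTL and multi-region points, the Python sketch below shows one way a client could cache DNS answers only for their TTL and fall back to another region's endpoint when the primary name returns no addresses, which was the symptom during this outage. `FailoverResolver` and the injected `resolver` callable are hypothetical (in practice you would plug in a real DNS library), and failing over only helps if the data is already replicated to the other region, for example with DynamoDB global tables.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical resolver signature: hostname -> (ips, ttl_seconds).
Resolver = Callable[[str], Tuple[List[str], int]]


@dataclass
class CacheEntry:
    ips: List[str]
    expires_at: float


class FailoverResolver:
    """Caches answers for their TTL and falls back to another region's
    endpoint when a name resolves to no addresses or the lookup fails."""

    def __init__(self, resolver: Resolver, endpoints: List[str],
                 clock: Callable[[], float] = time.monotonic):
        self.resolver = resolver
        self.endpoints = endpoints  # ordered by preference
        self.clock = clock
        self.cache: Dict[str, CacheEntry] = {}

    def _lookup(self, host: str) -> List[str]:
        now = self.clock()
        entry = self.cache.get(host)
        if entry and entry.expires_at > now:
            return entry.ips  # still within TTL: reuse the cached answer
        try:
            ips, ttl = self.resolver(host)
        except OSError:
            ips, ttl = [], 0  # treat lookup errors like an empty answer
        if ips:
            # Only non-empty answers are cached, so an empty primary is
            # re-queried on every call and recovery is picked up quickly.
            self.cache[host] = CacheEntry(ips, now + ttl)
        return ips

    def resolve(self) -> Tuple[str, List[str]]:
        for host in self.endpoints:
            ips = self._lookup(host)
            if ips:
                return host, ips
        raise RuntimeError("no endpoint resolved in any region")


# Usage with a fake resolver that mimics an empty us-east-1 record.
def fake_resolver(host: str) -> Tuple[List[str], int]:
    records = {
        "dynamodb.us-east-1.amazonaws.com": ([], 5),
        "dynamodb.us-west-2.amazonaws.com": (["192.0.2.20"], 60),
    }
    return records[host]


r = FailoverResolver(fake_resolver, ["dynamodb.us-east-1.amazonaws.com",
                                     "dynamodb.us-west-2.amazonaws.com"])
print(r.resolve())  # ('dynamodb.us-west-2.amazonaws.com', ['192.0.2.20'])
```

Not caching empty answers is a deliberate choice in this sketch: the client keeps probing the primary region and returns to it as soon as it resolves again, while healthy answers are reused only until their TTL expires.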

AWS is responding with measures to strengthen its systems and prevent similar incidents, which underscores the importance of resilience planning for the companies that depend on this critical infrastructure.

More information and references are available in Cloud News.